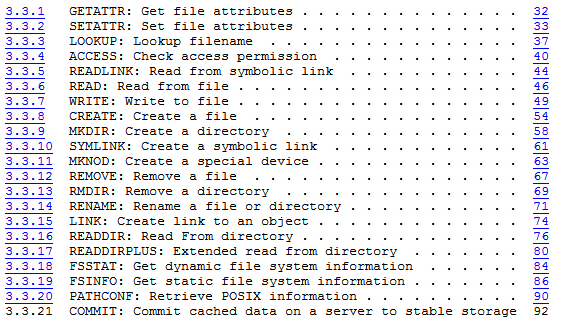

- Part 1: Intro

- Part 2: Hardware, Protocols, and Platforms, Oh My!

- Part 3: File Systems

- Part 4: The Layer Cake

- Part 5: Summary

We’ve covered a lot of information over this series, some of it more easily digestible than the rest. Hopefully it has been a good walkthrough of the main differences between SAN and NAS storage, presented in a slightly different way than you may have seen before.

I wanted to summarize the high points before focusing on a few key issues:

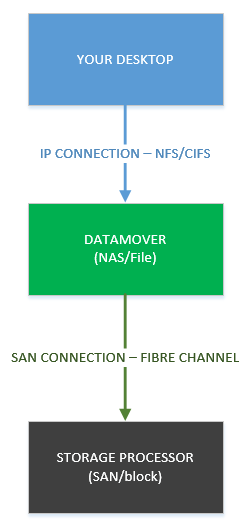

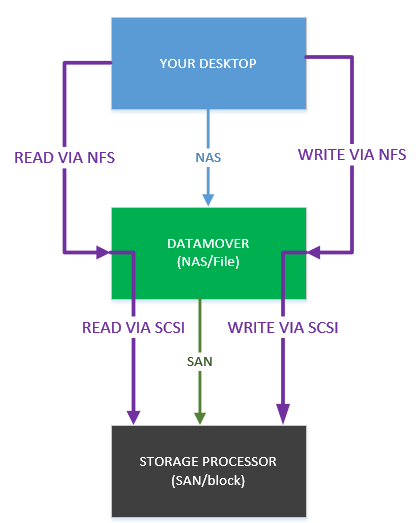

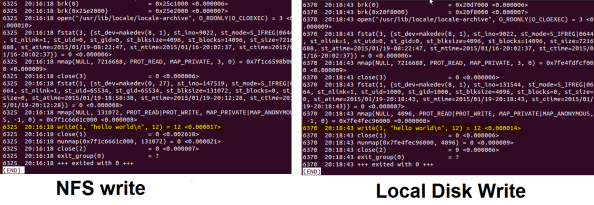

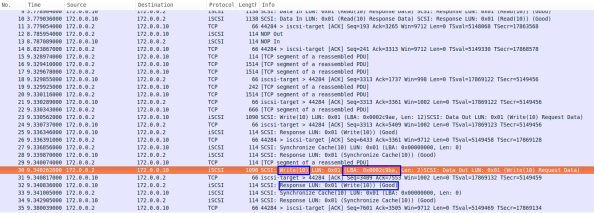

- SAN storage is fundamentally block I/O, which means SCSI. With SAN storage, your local machine “sees” something it thinks is a locally attached disk. Your local machine manages the file system, and transmissions to the array are simple SCSI read and write requests.

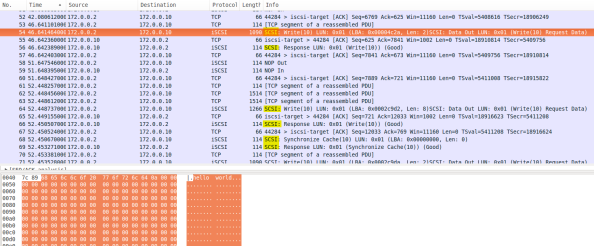

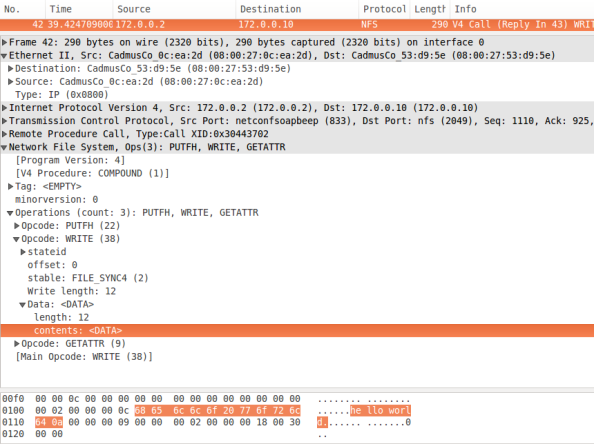

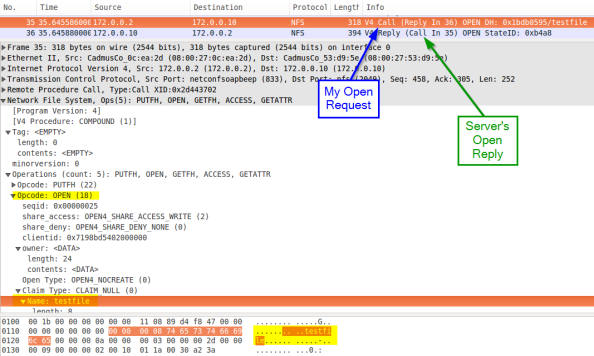

- NAS storage is file I/O, which is either NFS or CIFS. With NAS storage, your local machine “sees” a network service that it connects to for file storage. The array manages the file system, and transmissions to the array are protocol-specific, file-based operations.

- SAN and NAS have different strengths, weaknesses, and use cases

- SAN and NAS are very different from a hardware and protocol perspective

- SAN and NAS are sometimes offered only on specific array platforms
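To make the first two bullets concrete, here is a minimal Python sketch built on toy in-memory classes. `BlockArray`, `HostFileSystem`, and `NasArray` are hypothetical names, not a real array or protocol API. The sketch records what each kind of array receives when a host creates and then deletes the same file. The block array only ever logs writes against block addresses, while the NAS array logs named file operations.

```python
# Toy models (hypothetical, for illustration only) of what each kind of
# array receives from a host.

class BlockArray:
    """SAN-style target: only reads and writes addressed by block number (LBA)."""

    def __init__(self):
        self.log = []      # operations the array actually received
        self.blocks = {}   # LBA -> data

    def write(self, lba, data):
        self.log.append(("WRITE", lba))
        self.blocks[lba] = data

    def read(self, lba):
        self.log.append(("READ", lba))
        return self.blocks.get(lba, b"\x00")


class HostFileSystem:
    """File system run by the host on top of a BlockArray (the SAN case)."""

    def __init__(self, array):
        self.array = array
        self.files = {}    # file name -> LBAs; this metadata lives on the host
        self.next_lba = 1  # LBA 0 holds the toy directory

    def create(self, name, chunks):
        lbas = []
        for chunk in chunks:
            self.array.write(self.next_lba, chunk)
            lbas.append(self.next_lba)
            self.next_lba += 1
        self.files[name] = lbas
        self._flush_directory()

    def delete(self, name):
        # The host forgets the file; the array only sees a directory write.
        del self.files[name]
        self._flush_directory()

    def _flush_directory(self):
        self.array.write(0, repr(sorted(self.files)).encode())


class NasArray:
    """NAS-style server: the array owns the file system and sees named operations."""

    def __init__(self):
        self.log = []
        self.files = {}

    def create(self, name, chunks):
        self.log.append(("CREATE", name))
        self.files[name] = list(chunks)

    def remove(self, name):
        self.log.append(("REMOVE", name))
        del self.files[name]


san = BlockArray()
fs = HostFileSystem(san)
fs.create("report.docx", [b"a", b"b"])
fs.delete("report.docx")
print(san.log)  # [('WRITE', 1), ('WRITE', 2), ('WRITE', 0), ('WRITE', 0)]

nas = NasArray()
nas.create("report.docx", [b"a", b"b"])
nas.remove("report.docx")
print(nas.log)  # [('CREATE', 'report.docx'), ('REMOVE', 'report.docx')]
```

The single directory block at LBA 0 and the log labels are simplifications chosen for illustration. Real file systems and protocols carry far more metadata, but the shape of the difference is the same.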

Our Question

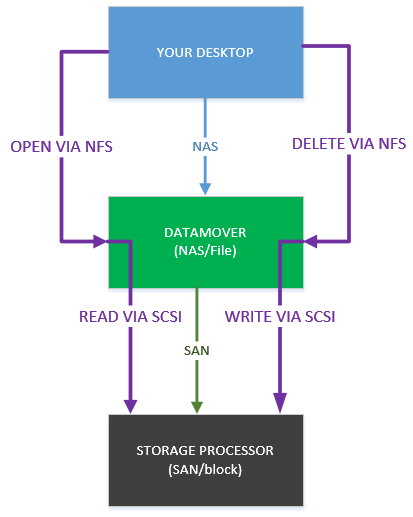

So back to the question that started this mess: with thin provisioned block storage, if I delete a ton of data out of a LUN, why don’t I see any space returned on the storage array? We now know this is because there is no such thing as a delete in the SAN/block/SCSI world. Thin provisioning works by allocating the storage you need on demand, generally because you tried to write to it. However, once the LUN exists and space has been allocated to it, the array only sees reads and writes, not creates and deletes. It has no way of knowing that some of the writes you sent over were intended to be a delete. Deletes belong to the file system, which is managed by your server, not the array. The LUN itself sits below the file system layer; it is the same disk address space filled with data we’ve been discussing. Apart from administratively deleting an entire object (a LUN, RAID set, pool, etc.), deletes don’t exist on SAN storage.
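The thin provisioning behavior described above can be modeled in a few lines. This is a minimal sketch built on a hypothetical `ThinLun` class that allocates space in fixed chunks of 8 blocks the first time any block in a chunk is written. The chunk size and block layout are arbitrary choices, not how any particular array works. The key point is what the class lacks: there is no delete method, so a host-side delete shows up as just another write.

```python
class ThinLun:
    """Toy thin-provisioned LUN (hypothetical model, not a real array API).

    The full size is promised to the host up front, but a physical chunk is
    only allocated the first time a block inside it is written. There is
    deliberately no delete(): block I/O only has reads and writes.
    """

    def __init__(self, size_blocks, chunk_blocks=8):
        self.size_blocks = size_blocks
        self.chunk_blocks = chunk_blocks
        self.allocated_chunks = set()  # indexes of physically backed chunks
        self.data = {}                 # LBA -> payload

    def write(self, lba, payload):
        if not 0 <= lba < self.size_blocks:
            raise ValueError("LBA out of range")
        self.allocated_chunks.add(lba // self.chunk_blocks)  # allocate on demand
        self.data[lba] = payload

    def read(self, lba):
        return self.data.get(lba, b"\x00")

    def allocated_blocks(self):
        return len(self.allocated_chunks) * self.chunk_blocks


lun = ThinLun(size_blocks=1024, chunk_blocks=8)

# The host's file system writes a 32-block file to LBAs 8-39 and records
# it in its own metadata block at LBA 0. The array only sees writes.
for lba in range(8, 40):
    lun.write(lba, b"file data")
lun.write(0, b"metadata: big.iso -> 8..39")
allocated_before = lun.allocated_blocks()

# The host "deletes" the file by rewriting its own metadata. To the array
# this is one more write; blocks 8-39 still hold data it must keep.
lun.write(0, b"metadata: (empty)")
allocated_after = lun.allocated_blocks()

print(allocated_before, allocated_after)  # 40 40
```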

With NAS storage, on the other hand, the array does manage the file system. You tell it to delete something by sending a delete command via NFS or CIFS, so it certainly knows what you want to delete. As a result, file system allocations on NAS devices usually fluctuate in capacity: they may be using 50GB out of 100GB today, but only 35GB out of 100GB tomorrow.
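By contrast, a NAS array tracks the files themselves. The sketch below uses a hypothetical `NasFileSystem` class that simply sums file sizes in GB. It reproduces the 50GB-to-35GB example: the delete request names the file, so the array can drop it, and the used capacity goes down immediately.

```python
class NasFileSystem:
    """Toy NAS file system (hypothetical model): the array tracks every file."""

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.files = {}  # path -> size in GB

    def write_file(self, path, size_gb):
        # Refuse writes that would exceed capacity (overwrites replace old size).
        if self.used_gb() - self.files.get(path, 0) + size_gb > self.capacity_gb:
            raise OSError("file system full")
        self.files[path] = size_gb

    def remove(self, path):
        # Over NFS or CIFS the delete names the file, so the array can free it.
        del self.files[path]

    def used_gb(self):
        return sum(self.files.values())


nas = NasFileSystem(capacity_gb=100)
nas.write_file("/home/alice/video.mp4", 15)
nas.write_file("/home/bob/archive.zip", 35)
print(f"{nas.used_gb()} GB of {nas.capacity_gb} GB")  # 50 GB of 100 GB

nas.remove("/home/alice/video.mp4")
print(f"{nas.used_gb()} GB of {nas.capacity_gb} GB")  # 35 GB of 100 GB
```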

Note: there are ways to reclaim space, either on the array side with thin reclamation (if supported) or on the host side with the SCSI UNMAP command (if supported). Both methods can reclaim some or all of the deleted space on a block array, but they must be run as an operation separate from the delete itself. This is not a true “delete” operation, but it may result in less storage being allocated.
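A small toy model shows why reclamation is a separate step. This sketch uses a hypothetical `ThinLun` class with 8-block chunks and adds an `unmap()` method loosely modeled on an UNMAP-style request. The host explicitly names a block range it no longer needs, and the array releases any chunk that the range covers completely. The chunk size, LBA layout, and method names are illustrative assumptions. The delete alone changes nothing; only the later unmap call shrinks the allocation.

```python
class ThinLun:
    """Toy thin-provisioned LUN (hypothetical model, not a real array API)."""

    def __init__(self, size_blocks, chunk_blocks=8):
        self.size_blocks = size_blocks
        self.chunk_blocks = chunk_blocks
        self.allocated_chunks = set()  # indexes of physically backed chunks
        self.data = {}                 # LBA -> payload

    def write(self, lba, payload):
        if not 0 <= lba < self.size_blocks:
            raise ValueError("LBA out of range")
        self.allocated_chunks.add(lba // self.chunk_blocks)  # allocate on demand
        self.data[lba] = payload

    def unmap(self, lba, count):
        """UNMAP-style request (modeled loosely): the host states that blocks
        [lba, lba + count) no longer hold data it cares about."""
        end = lba + count
        for block in range(lba, end):
            self.data.pop(block, None)
        # Only chunks covered entirely by the range can be released.
        first_full = -(-lba // self.chunk_blocks)  # ceiling division
        last_full = end // self.chunk_blocks       # exclusive upper bound
        for chunk in range(first_full, last_full):
            self.allocated_chunks.discard(chunk)

    def allocated_blocks(self):
        return len(self.allocated_chunks) * self.chunk_blocks


lun = ThinLun(size_blocks=1024, chunk_blocks=8)
for lba in range(8, 40):                  # host writes a 32-block file
    lun.write(lba, b"file data")
lun.write(0, b"metadata: big.iso -> 8..39")

lun.write(0, b"metadata: (empty)")        # host-side delete: metadata only
after_delete = lun.allocated_blocks()

lun.unmap(8, 32)                          # separate reclaim step
after_unmap = lun.allocated_blocks()
print(after_delete, after_unmap)          # 40 8
```

On Linux hosts, for example, `fstrim` asks a mounted file system to issue discards for its free space, which the kernel can pass to a SCSI device as UNMAP when the device supports it.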

Which Is Better?

Yep, get out your battle gear and let’s duke it out! Which is better? SAN vs NAS! Block vs File! Pistols at high noon!

Unfortunately, as engineers we often fixate on this “something must be the best” idea.

Hopefully, if you’ve read this whole series, you realize how silly this question is, for the most part. SAN and NAS storage occupy different areas and cover different functions. Most things that need NAS functionality (many access points and permissions control) don’t care about SAN functionality (block level operations and utilities), and vice versa. This question is kind of like asking which is better, a toaster or a doorstop? Well, do you need to toast some delicious bread or do you need to stop a delicious door?

In some cases there is overlap. For example, vSphere datastores can be accessed over block protocols or over NAS (NFS). In that case, the answer usually comes down to which option is the best fit for your environment:

- What kind of hardware do you have (or what kind of budget do you have)?

- What kind of admins do you have and what are their skillsets?

- What kind of functionality do you need?

- What else in the environment needs storage (for example, does something else need SAN storage or NFS storage)?

- Do you need RDMs (Raw Device Mappings: LUNs mapped directly from the array to a VM in order to expose some of the SCSI functionality)?

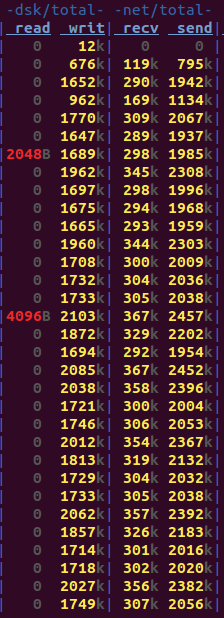

From a performance perspective, 10Gb NFS and 10Gb iSCSI will perform about the same for you, and honestly you probably won’t hit the limits of either anyway. These other questions are far more critical.

Which leads me to…

What Do I Need?

A frequently asked question in the consulting world is: what do I need, NAS or SAN? This is a great question to ask and think about, but again, it comes back to what you need to do.

Do you have a lot of user files that you need remote access to? Windows profiles or home directories? Then you probably need NAS.

Do you have a lot of database servers, especially ones that utilize clustering? Then you probably need SAN.

Truthfully, most organizations need some of both; the real question is how much of each. This will vary for every organization, but hopefully, armed with the information in this blog series, you are closer to making that choice for your situation.