A commenter on my post about RecoverPoint Journal Usage asks:

How can I tell if my journal is large enough for a consistency group? That is to say, where in the GUI will it tell me I need to expand my journal or add another journal lun?

This is an easy question to answer, but for me it is another opportunity to reiterate journal behavior. Scroll to the end if you are in a hurry.

Back to Snapshots…

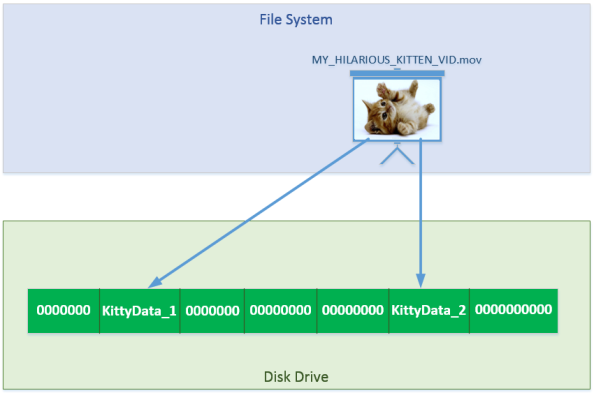

Back to our example in the previous article about standard snapshots – on platforms where snapshots are used you often have to allocate space for this purpose. With SnapView on EMC VNX and CLARiiON, for example, you allocate space via the Reserved LUN Pool. On NetApp systems this is called the snapshot reserve.

Because of snapshot behavior (whether Copy On First Write or Redirect On Write), at any given time I’m using some variable amount of space in this area that is related to my change rate on the primary copy. If most of my data space on the primary copy is the same as when I began snapping, I may be using very little space. If instead I have overwritten most of the primary copy, then I may be using a lot of space. And again, as I delete snapshots over time this space will free up. So a potential set of actions might be:

- Create snapshot reserve of 10GB and create snapshot1 of primary – 0% reserve used

- Overwrite 2.5GB of data on primary – 25% reserve used

- Create snapshot2 of primary and overwrite a different 2.5GB of data on primary – 50% reserve used

- Delete snapshot1 – 25% reserve used

- Overwrite 50GB of data – snapshot space full (probably bad things happen here…)
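To make the arithmetic in that sequence concrete, here is a minimal sketch of a copy-on-first-write reserve. It is a toy model and not how SnapView or NetApp actually track blocks: the 0.5GB chunk size, the `SnapshotReserve` class, and the `ReserveFull` exception are all made up for illustration. The only assumption is that an overwritten chunk is preserved once and shared by every snapshot that still needs it.

```python
CHUNK_GB = 0.5  # hypothetical chunk size, chosen only to keep the numbers round


class ReserveFull(Exception):
    """Raised when preserved data no longer fits in the reserve."""


class SnapshotReserve:
    def __init__(self, reserve_gb):
        self.reserve_gb = reserve_gb
        self.seen = {}    # snapshot name -> chunk ids already preserved for it
        self.copies = []  # one set of snapshot names per preserved chunk copy

    def create_snapshot(self, name):
        self.seen[name] = set()

    def delete_snapshot(self, name):
        del self.seen[name]
        for owners in self.copies:
            owners.discard(name)
        # A preserved copy is freed once no snapshot needs it any more.
        self.copies = [owners for owners in self.copies if owners]

    def overwrite(self, chunk_ids):
        for chunk in chunk_ids:
            # Only snapshots that have not yet saved this chunk need the old data.
            owners = {s for s, done in self.seen.items() if chunk not in done}
            if not owners:
                continue
            for s in owners:
                self.seen[s].add(chunk)
            self.copies.append(owners)
            if self.used_gb() > self.reserve_gb:
                raise ReserveFull(f"reserve of {self.reserve_gb} GB exhausted")

    def used_gb(self):
        return len(self.copies) * CHUNK_GB

    def used_percent(self):
        return 100 * self.used_gb() / self.reserve_gb


reserve = SnapshotReserve(reserve_gb=10)
reserve.create_snapshot("snapshot1")
print(reserve.used_percent())        # 0.0
reserve.overwrite(range(0, 5))       # overwrite 2.5 GB
print(reserve.used_percent())        # 25.0
reserve.create_snapshot("snapshot2")
reserve.overwrite(range(5, 10))      # overwrite a different 2.5 GB
print(reserve.used_percent())        # 50.0
reserve.delete_snapshot("snapshot1")
print(reserve.used_percent())        # 25.0
try:
    reserve.overwrite(range(0, 100))  # overwrite 50 GB
except ReserveFull as err:
    print("bad things happen:", err)
```

The point of the model is just that reserve usage goes up and down with change rate and snapshot deletion, and that it has a hard ceiling you can hit.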

There is meaning to how much space I have allocated to snapshot reserve. I can have way too much (meaning my snapshots only use a very small portion of the reserve) and waste a lot of storage. Or I can have too little (meaning my snapshots keep overrunning the maximum) and probably cause a lot of problems with the integrity of my snaps. Or it can be just right, Goldilocks.

RP Journal

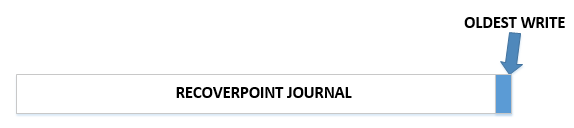

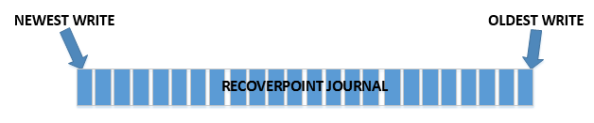

Once again the RP journal does not function like this. Over time we expect RP journal utilization to be at 100%, every time. If you don’t know why, please read my previous post on it!

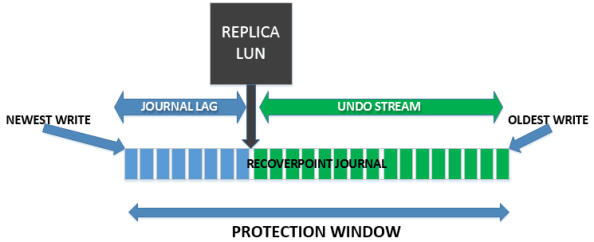

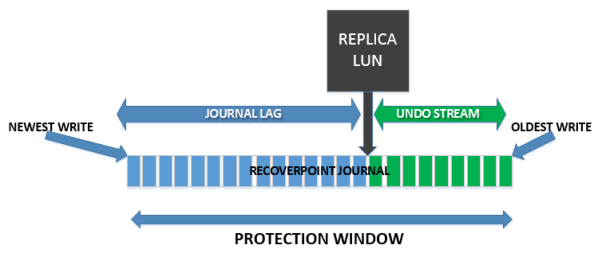

The size of the journal only defines your protection window in RP. The more space you allocate, the further back you are able to recover. However, as a rule of thumb there is no such thing as “too little” or “too much” journal space – these are business-defined goals that are unique to every organization.

I may have allocated 5GB of journal space to an app, and that lets me recover 2 weeks back because it has a really low write rate. If my SLA requires me to recover 3 weeks back, that is a problem.

I may have allocated 1TB of journal space to an app, and that lets me recover back 30 minutes because it has an INSANE write rate. If my SLA only requires me to recover back 15 minutes, then I’m within spec.
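Both examples boil down to the same back-of-the-envelope relationship: protection window is roughly journal size divided by write rate. Here is a small sketch of that, assuming a steady write rate and that the whole journal is available for history (in reality some journal space goes to other uses and write rates fluctuate). The function names are my own and are not anything RecoverPoint exposes.

```python
HOURS_PER_WEEK = 7 * 24


def protection_window_hours(journal_gb, write_rate_gb_per_hour):
    """Roughly how far back the journal reaches at a steady write rate."""
    if write_rate_gb_per_hour <= 0:
        raise ValueError("write rate must be positive")
    return journal_gb / write_rate_gb_per_hour


def meets_sla(journal_gb, write_rate_gb_per_hour, required_hours):
    """True when the estimated window covers the business requirement."""
    window = protection_window_hours(journal_gb, write_rate_gb_per_hour)
    return window >= required_hours


# Low write rate: 5 GB of journal covers about two weeks of changes.
low_rate = 5 / (2 * HOURS_PER_WEEK)
print(meets_sla(5, low_rate, 3 * HOURS_PER_WEEK))  # False: the SLA wants 3 weeks

# Very high write rate: 1 TB of journal covers only 30 minutes.
high_rate = 1024 / 0.5
print(meets_sla(1024, high_rate, 0.25))            # True: the SLA wants 15 minutes
```

Notice that the only input that says "good" or "bad" is `required_hours`, and that comes from you, not from the array.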

RP has no idea about what is good journal sizing or bad journal sizing, because the journal is simply a recovery timeline. You must decide whether it is good or bad, and then allocate additional journals as necessary. Unlike other technologies such as snapshots, there is no concept of “not enough journal space” beyond your own SLAs. So by default RecoverPoint won’t let you know that you need more journal space for a given CG, because it simply can’t know that.

Note: if you are regularly using the Test A Copy functionality for long periods of time (even though you really shouldn’t…), then you may run into sizing issues beyond just protection windows, as portions of the journal space are also used for that. This is beyond the scope of this post, but just be aware that even if you are in spec from a protection window standpoint, you may need more journal space to support the test copy.

Required Protection Window

So RecoverPoint has no way of knowing whether you’ve allocated enough journal space to a given CG. Folks on the pre-sales side have some nifty tools that can help with journal sizing by looking at data change rate, but this is really for the entire environment and hopefully before you bought it.

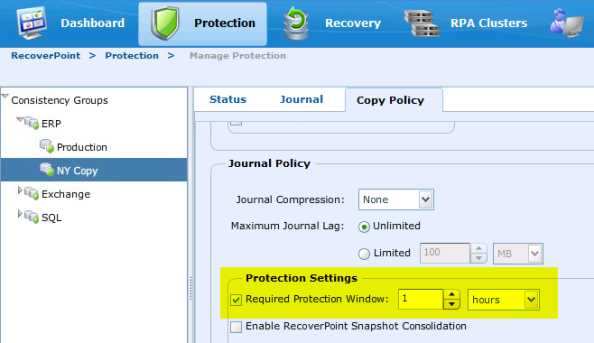

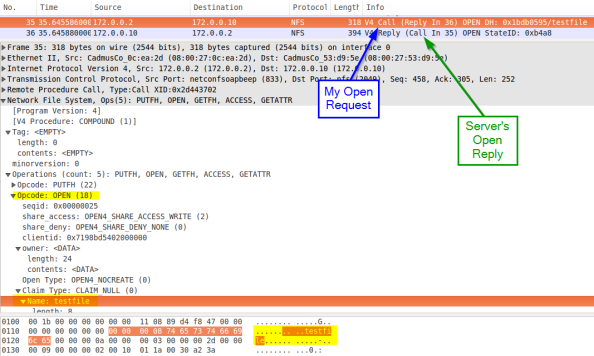

Luckily, RecoverPoint has a nice internal feature to alert you whether a given Consistency Group is within spec or not, and that is “Required Protection Window.” This is a journal option within each copy and can be configured when a CG is created, or modified later. Here is a pic of a CG without it. Note that you can still see your current protection window here and make adjustments if you need.

Here is where the setting is located.

And here is what it looks like with the setting enabled.

So if I need to recover back 1 hour on this particular app, I set it to 1 hour and I’m good. If I need to recover back 24 hours, I set it that way and it looks like I need to allocate some additional journal space to support that.

Now this does not control the behavior of RecoverPoint (unlike, say, the Maximum Journal Lag setting) – whether you are within or under your required protection window, RP still functions the same. It simply alerts you that you are under your personally defined window for that CG. If you are under for too long, or under at all for a mission-critical application, you may want to add journal space to extend your protection window so that you are back within spec. Again, this is only an alerting function and will not, by itself, do anything to “fix” protection window problems!
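If you want a rough idea of how much journal to add when you are under the window, here is a sketch of the comparison plus a naive estimate. This is not RecoverPoint's logic or API: `check_protection_window` and `WindowCheck` are hypothetical names, the sample numbers are invented, and the estimate assumes the window grows linearly with journal size at a steady write rate.

```python
from dataclasses import dataclass


@dataclass
class WindowCheck:
    within_spec: bool
    extra_journal_gb: float  # rough estimate, 0.0 when already within spec


def check_protection_window(current_hours, required_hours, journal_gb):
    """Compare the current window to the required one; changes nothing."""
    if current_hours >= required_hours:
        return WindowCheck(within_spec=True, extra_journal_gb=0.0)
    # Assumption: the window scales linearly with journal size.
    needed_gb = journal_gb * required_hours / current_hours
    return WindowCheck(within_spec=False, extra_journal_gb=needed_gb - journal_gb)


# Hypothetical CG: a 10 GB journal currently gives a 6 hour window.
print(check_protection_window(6, 1, 10))   # within spec for a 1 hour requirement
print(check_protection_window(6, 24, 10))  # under spec for 24 hours
```

Like the real setting, the function only reports. Acting on the result (adding journal space) is still up to you.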

Summary

So bottom line: RP doesn’t – or more accurately can’t – know whether you have enough journal space allocated to a given CG, because journal size only affects how far back you can roll. However, using the Required Protection Window feature, you can tell RP to alert you if you go out of spec, and then you can act accordingly.