- Part 1: Intro

- Part 2: Hardware, Protocols, and Platforms, Oh My!

- Part 3: File Systems

- Part 4: The Layer Cake

- Part 5: Summary

In the last blog post, we asked a question: “who has the file system?” This will be important in our understanding of the distinction between SAN and NAS storage.

First, what is a file system? Simply (see edit below!), a file system is a way of logically sorting and addressing raw data. If you were to look at the raw contents of a disk, it would look like a jumbled mess. This is because there is no real structure to it. The file system is what provides the map. It lets you know that block 005A and block 98FF both hold pieces of your text file that reads “hello world.” But on disk it is just a bunch of 1s and 0s in seemingly random order.

Edit: Maybe I should have chosen a better phrase like “At an extremely basic level” instead of “Simply.” 🙂 As @Obdurodon pointed out in the comments below, file systems are a lot more than a map, especially these days. They help manage consistency and help enable cool features like snapshots and deduplication. But for the purposes of this post this map functionality is what we are focusing on as this is the relationship between the file system and the disk itself.

File systems allow you to do things beyond just reads and writes. Because they form files out of data, they let you do things like open, close, create, and delete. They allow you the ability to keep track of where your data is located automatically.
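To make the "map" idea concrete, here is a toy sketch in Python. It is nowhere near how a real file system such as EXT4 or NTFS works; `ToyDisk`, `ToyFileSystem`, and the 8-byte block size are all made up for illustration. The disk only knows about numbered blocks, and the file system is the table that remembers which blocks belong to which file name.

```python
import random

BLOCK_SIZE = 8  # bytes per block; tiny on purpose so "hello world" spans two blocks


class ToyDisk:
    """A raw block device: numbered fixed-size blocks and nothing else."""

    def __init__(self, num_blocks):
        self.blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def write_block(self, lba, data):
        # Pad short data with zero bytes so every block has the same size.
        self.blocks[lba] = data.ljust(BLOCK_SIZE, b"\0")

    def read_block(self, lba):
        return self.blocks[lba]


class ToyFileSystem:
    """The map: file name -> (list of block addresses, file length)."""

    def __init__(self, disk, seed=0):
        self.disk = disk
        self.table = {}
        self.free = list(range(len(disk.blocks)))
        # Shuffle the free list so files land on scattered blocks,
        # mimicking the "jumbled mess" you would see on the raw disk.
        random.Random(seed).shuffle(self.free)

    def create(self, name, data):
        chunks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
        if len(chunks) > len(self.free):
            raise OSError("no space left on toy disk")
        lbas = [self.free.pop() for _ in chunks]
        for lba, chunk in zip(lbas, chunks):
            self.disk.write_block(lba, chunk)
        self.table[name] = (lbas, len(data))

    def read(self, name):
        lbas, length = self.table[name]
        raw = b"".join(self.disk.read_block(lba) for lba in lbas)
        return raw[:length]  # drop the padding from the last block

    def delete(self, name):
        lbas, _ = self.table.pop(name)
        self.free.extend(lbas)  # blocks become reusable; the data is not wiped


disk = ToyDisk(16)
fs = ToyFileSystem(disk)
fs.create("testfile", b"hello world")
print(fs.table["testfile"])  # which blocks hold the file, and its length
print(fs.read("testfile"))
```

Without `fs.table`, the contents of `disk.blocks` are just padded byte strings in no obvious order, which is the point of the paragraph above.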

(note: there are a variety of file systems depending on the platform you are working with, including FAT, NTFS, HFS, UXFS, EXT3, EXT4, and many more. They have a lot of factors that distinguish them from one another, and sometimes have different real world applications. For the purposes of this blog series we don’t really care about these details.)

Because SAN storage can be thought of as a locally attached disk, the same applies here. The SAN storage itself is a jumbled mess, and the file system (data map) is managed by the host operating system. Similar to the local C: drive in your Windows laptop, your OS puts down a file system and manages the location of the block data. Your system knows and manages the file system, so it interacts with the storage array at a block level with SCSI commands, below the file system itself.

With NAS storage on the other hand, even though it may appear the same as a local disk, the file system is actually not managed by your computer – or more accurately the machine the export/share is mounted on. The file system is managed by the storage array that is serving out the data. There is a network service running that allows you to connect to and interact with it. But because that remote array manages the file system, your local system doesn’t. You send commands to it, but not SCSI commands.

With SAN storage, your server itself manages the file system and with NAS storage the remote array manages the file system. Big deal, right? This actually has a MAJOR impact on functionality.
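Here is a rough Python sketch of that ownership difference. All of the class names are hypothetical, and each file is squeezed into a single block to keep things short. The only thing to look at is where `file_table` lives in each case.

```python
class BlockArray:
    """SAN-style array: stores numbered blocks and knows nothing about files."""

    def __init__(self):
        self.blocks = {}  # LBA -> bytes

    def write_block(self, lba, data):
        self.blocks[lba] = data

    def read_block(self, lba):
        return self.blocks[lba]


class SanHost:
    """The host owns the file system: it maps names to LBAs itself."""

    def __init__(self, array):
        self.array = array
        self.file_table = {}  # name -> LBA, kept on the host

    def write_file(self, name, data):
        # New files get the next unused LBA; existing files keep theirs.
        lba = self.file_table.setdefault(name, len(self.file_table))
        self.array.write_block(lba, data)

    def read_file(self, name):
        return self.array.read_block(self.file_table[name])


class NasServer:
    """NAS-style array: owns the file system and is addressed by file name."""

    def __init__(self):
        self.file_table = {}  # name -> LBA, kept on the server
        self.blocks = {}

    def write_file(self, name, data):
        lba = self.file_table.setdefault(name, len(self.file_table))
        self.blocks[lba] = data

    def read_file(self, name):
        return self.blocks[self.file_table[name]]


class NasClient:
    """The client keeps no map at all; it just forwards file-level requests."""

    def __init__(self, server):
        self.server = server

    def write_file(self, name, data):
        self.server.write_file(name, data)

    def read_file(self, name):
        return self.server.read_file(name)
```

From the caller's side, `SanHost.write_file` and `NasClient.write_file` look the same. The difference is which machine holds the name-to-block table.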

I set up a small virtual lab using VirtualBox with a CentOS server running an NFS export and an iSCSI target (my remote server), and an Ubuntu desktop to use as the local system. After jumping through a few hoops, I got everything connected up. All commands below are run and all screenshots are taken from the Ubuntu desktop.

I’ll also take a moment to mention how awesome Linux is for these types of things. It took some effort to get things configured, but it was absolutely free to set up an NFS/iSCSI server and a desktop to connect to it. I’ve said it before but will say it again – learn your way around Linux and use it for testing!

So remember, who has the file system? Note that with the iSCSI LUN, I got a raw block device (a.k.a. a disk) presented from the server to my desktop. I had to create a partition and then format it with EXT4 before I could mount it. With the NFS export, I just mounted it immediately – no muss no fuss. That’s because the file system is actually on the server, not on my desktop.

Now, if I were to unmount the iSCSI LUN and then mount it up again (or on a different Linux desktop), I wouldn’t need to lay down a file system, but that is only because it has already been done once. With SAN storage, the attached computer always has to put down a file system the first time the LUN is used. With NAS storage, there is no such need because the file system is already in place on the remote server or array.

Let’s dive in and look at the similarities and differences depending on where the file system is.

Strace

First let’s take a look at strace. strace is a utility that exposes some of the ‘behind the scenes’ activity when you execute commands on the box. Let’s run this command against a data write via a simple redirect:

strace -vv -Tt -f -o traceout.txt echo "hello world" > testfile

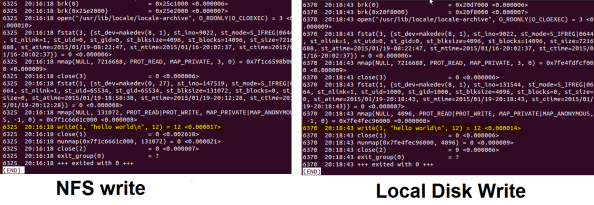

Essentially we are running strace with a slew of flags against the command [ echo "hello world" > testfile ]. Here is a screenshot of the relevant portion of both outputs when I ran the command with testfile located on the NFS export vs the local disk.

Okay, there is a lot of cryptic info on those pics, but notice that in both cases the write looks identical. The “things” that are happening in each screenshot look the same. This is a good example of how local and remote I/O “appears” the same, even at a pretty deep level. You don’t need to specify that you are reading or writing to a NAS export; the system knows what the final destination is and makes the necessary arrangements.
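The same thing can be seen from a program's point of view. Below is a minimal Python sketch, assuming nothing beyond the standard library: `write_hello` is a made-up helper, and the temporary directory is only a stand-in so the snippet runs anywhere. In a lab like mine you would call it once with a local directory and once with the NFS mount point (for example ~/mynfs), and the code would not change at all.

```python
import os
import tempfile


def write_hello(directory):
    """Write "hello world" to testfile in the given directory.

    os.open, os.write, and os.close are thin wrappers around the
    system calls of the same names, the kind of calls strace reports.
    """
    path = os.path.join(directory, "testfile")
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        os.write(fd, b"hello world\n")
    finally:
        os.close(fd)
    return path


# Stand-in target directory; swap in a local path or an NFS mount point.
with tempfile.TemporaryDirectory() as target:
    written = write_hello(target)
    with open(written, "rb") as f:
        print(f.read())
```

Nothing in `write_hello` says whether the directory is local or remote. That decision is made below the system call layer, which is why the two traces look alike.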

Dstat

Let’s try another method – dstat. Dstat is a good utility for seeing the types of I/O running through your system. And since this is a lab system, I know it is more or less dead unless I’m actively doing something on it.

I’m going to run a large stream of writes (again, simple redirection) in various locations (one location at a time!) while I have dstat running in order to see the differences. The command I’m using is:

for i in {1..100000}; do echo $i > myout; done

With myout located in different spots depending on what I’m testing.

For starters, I ran it against the local disk:

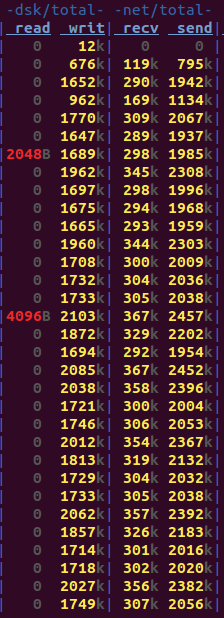

Note the two columns in the center indicating “dsk” traffic (I/O to a block device) and “net” traffic (I/O across the network interfaces). You can think of the “dsk” traffic as SCSI traffic. Not surprisingly, we have no meaningful network traffic, but sustained block traffic. This makes sense since we are writing to the local disk.

Next, I targeted it at the NFS export.

A little different this time: even though I’m writing to a file that appears in the filesystem of my local machine (~/mynfs/myout), there is no block I/O. Instead we’ve got a slew of network traffic. Again this makes sense because, as I explained, even though the file “appears” to be mine, it is actually the remote server’s.

Finally, here are writes targeted at the iSCSI LUN.

Quite interesting, yes? We have BOTH block and network traffic. Again this makes sense. The LUN itself is attached as a block device, which generates block I/O. However, iSCSI traffic travels over IP, which hits my network interfaces. The numbers are a little skewed since the block I/O on the left is actually included in the network I/O on the right.

So we are able to see that something is different depending on where my I/O is targeted, but let’s dig even deeper. It’s time to…

WIRESHARK!

For this example, I’m going to run a redirect with cat:

cat > testfile

hello world

ctrl+c

This is simply going to write “hello world” into testfile.

After firing up Wireshark and making all the necessary arrangements to capture traffic on the interface that I’m using as an iSCSI initiator, I’m ready to roll. This will allow me to capture network traffic between my desktop and server.

Here are the results:

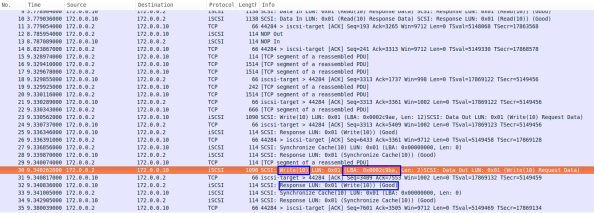

There is a lot of stuff on this pic as expected, but notice the write command itself. It is targeted at a specific LBA, just as if it were a local disk that I’m writing to. And we get a response from the server that the write was successful.

Here is another iSCSI screenshot.

I’ve highlighted the write and you can see my “hello world” in the payload. Notice all the commands I highlighted with “SCSI” in them. It is clear that this is a block level interaction with SCSI commands, sent over IP. Note also that in both screenshots, there is no file interaction.

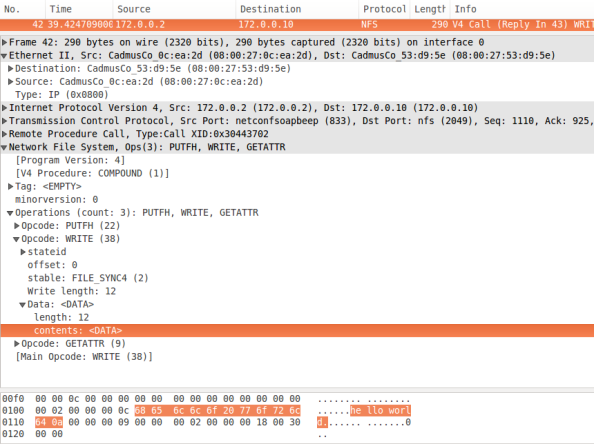

Now let’s take a look at the NFS export on my test server. Again I’m firing up Wireshark and we’ll do the same capture operation on the interface I’m using for NFS. I’m using the same command as before.

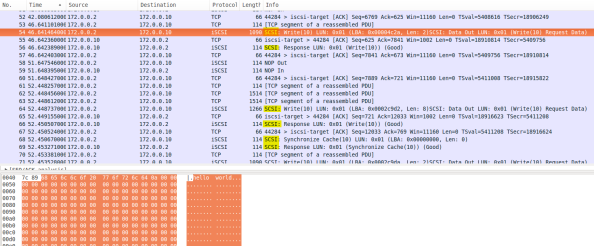

Here is the NFS write command with my data. There are standard networking headers and my hello world is buried in the payload. Not much difference from iSCSI, right?

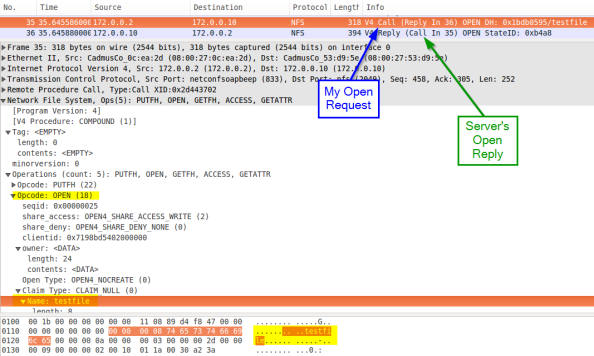

The difference is a few packets before:

We’ve got an OPEN command! I attempt to open the file “testfile” and the server responds to my request like a good little server. This is VERY different from iSCSI! With iSCSI we never had to open anything; we simply sent a write request for a specific Logical Block Address. With iSCSI, the file itself is opened by the OS because the OS manages the file system. With NFS, I have to send an OPEN to the NAS in order to discover the file handle, because my desktop has no idea what is going on with the file system.
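As a heavily simplified sketch of what goes over the wire in each case, here is the difference written out in Python. These dataclasses are not real protocol structures, and real iSCSI and NFS exchanges carry much more than this. The LBA value `90`, the handle value `1`, and the function names are placeholders I made up.

```python
from dataclasses import dataclass


@dataclass
class ScsiWrite:
    """Block-level request: an address and a payload, no file name."""
    lba: int
    data: bytes


@dataclass
class NfsOpen:
    """File-level request: ask the server to resolve a name to a handle."""
    name: str


@dataclass
class NfsWrite:
    """File-level request: write at an offset within an opened file."""
    handle: int
    offset: int
    data: bytes


def san_requests(file_table, name, data):
    """The host resolves the name locally, so only a block write is sent."""
    return [ScsiWrite(lba=file_table[name], data=data)]


def nas_requests(name, data, handle=1):
    """The client has no map, so it must open by name before writing.

    handle stands in for whatever the server would return from the open.
    """
    return [NfsOpen(name=name), NfsWrite(handle=handle, offset=0, data=data)]


# The host's own table says testfile lives at (hypothetical) LBA 90.
print(san_requests({"testfile": 90}, "testfile", b"hello world\n"))
print(nas_requests("testfile", b"hello world\n"))
```

The file name never appears in the SAN request list, because the lookup already happened on the host before anything was sent.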

This is, I would argue, THE most important distinction between SAN and NAS and hopefully I’ve demonstrated it well enough to be understandable. SAN traffic is SCSI block commands, while NAS traffic is protocol-specific file operations. There is also some overlap here (like read and write), but these are still different entities with different targets. We’ll take a look at the protocols and continue discussing the layering effect of file systems in Part 4.

Nice article, but (as a file system developer) I’d say that describing a file system in terms of mapping file blocks to disk blocks can be misleading. Even “at rest” a file system adds important structure and semantics – hierarchical directories, regular and extended attributes, and so on. A file system’s *dynamic* behavior can be even more important. The permissions model is like nothing that exists at the block level. Ditto for guarantees (or too often lack thereof) about consistency, durability, ordering, etc. A major piece of any file system nowadays is the journal – or alternatively the copy-on-write tree in ZFS or btrfs – which is key to providing those guarantees along with decent performance. Thinking of the file system as just a space allocator, and especially assuming that the space thus allocated will have the same behavior as raw disk blocks, is how a lot of applications end up not being able to survive a crash with their data intact. Perhaps a good followup to this post would be one that highlights some of these differences between life at the block and file level.

Great points. I think this may have been my poor word choice of “Simply”…I’ve inserted an edit to hopefully clear things up. File systems are in no way, shape, or form simple. 🙂 In subsequent posts of this series I’m going to scratch the surface of some of what you are talking about – the actual relationship between what exists in the file system and the disk, and some consistency issues regarding host file buffers and concurrent access – but certainly won’t hit the depth that I’m sure you see things at. But definitely keep me honest here! I’m always happy to learn something new, or think about things differently.