In this post we are going to configure a local consistency group within XtremIO, armed with our knowledge of the CG settings. I want to configure one snap per hour for 48 hours, 48 max snaps.
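The numbers line up in a simple way: one snap an hour over a 48 hour window comes to 48 snaps. Here is a quick sketch of that arithmetic in Python. The function name and parameters are made up for illustration and are not part of RP or XtremIO.

```python
def snaps_needed(window_hours: int, interval_minutes: int) -> int:
    """Count the periodic snaps taken across a protection window.

    Hypothetical helper for illustration only; it is not an RP setting or API.
    """
    if window_hours <= 0 or interval_minutes <= 0:
        raise ValueError("window and interval must be positive")
    window_minutes = window_hours * 60
    # Ceiling division, so a partial interval at the end still needs a snap slot.
    return -(-window_minutes // interval_minutes)


if __name__ == "__main__":
    # One snap every 60 minutes over 48 hours.
    print(snaps_needed(48, 60))
```

If you change the interval or the window, rerun the math so the max snap count you enter later still covers the whole window.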

Because I’m working with local protection, I have to have the full featured licensing (/EX) instead of the basic (/SE) that only covers remote protection. Note: these licenses are different than normal /SE and /EX RP licenses! If you have an existing VNX with standard /SE, then XtremIO with /SE won’t do anything for you!

I have also already configured the system itself, so I’ve presented the 3GB repository volume, configured RP, and added this XtremIO cluster into RP.

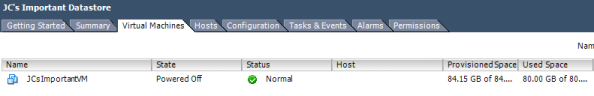

All that’s left now is to present storage and protect! I’ve got a 100GB production LUN I want to protect. I have actually already presented this LUN to ESX, created a datastore, and created a very important 80GB Eager Zero Thick VM on it.

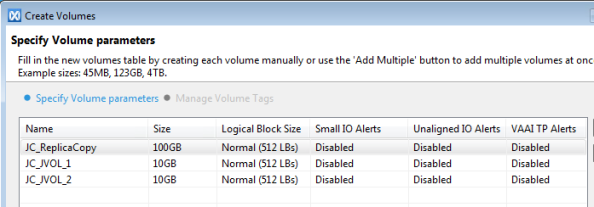

First things first, I need to create a replica for my production LUN – this must be the exact same size as the production LUN, although again this is always my recommendation with RP anyway. I also need to create some journal volumes as well. Because this isn’t a distributed CG, I’ll be using the minimum 10GB sizing. Lucky for us, creating volumes on XtremIO is easy peasy. Just a reminder – you must use 512 byte blocks instead of 4K, but you are likely using that already anyway due to lack of 4K support.

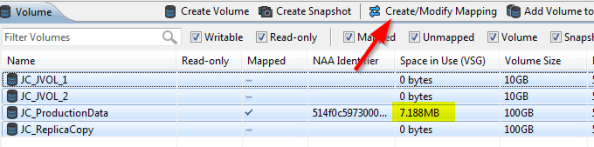

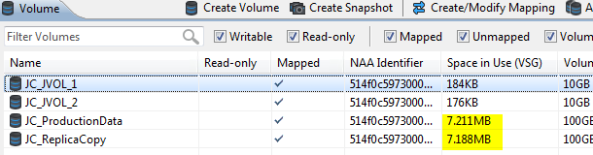
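The three rules above (replica the same size as production, journals at least 10GB for a non-distributed CG, 512 byte blocks) are easy to sanity check before you map anything. A minimal sketch, assuming you jot the volume plan down by hand; the `Volume` class, the volume names, and `check_plan` are all hypothetical and don't talk to XtremIO or RP.

```python
from dataclasses import dataclass

MIN_JOURNAL_GB = 10       # minimum journal size for a non-distributed CG, per the text
REQUIRED_BLOCK_SIZE = 512  # bytes; 4K logical blocks are not usable here


@dataclass
class Volume:
    """Hand-entered description of a planned volume (illustrative only)."""
    name: str
    size_gb: int
    block_size: int = 512


def check_plan(prod: Volume, replica: Volume, journals: list) -> list:
    """Return a list of human-readable problems; an empty list means the plan looks fine."""
    problems = []
    if replica.size_gb != prod.size_gb:
        problems.append(
            f"{replica.name} is {replica.size_gb}GB but {prod.name} is {prod.size_gb}GB"
        )
    for journal in journals:
        if journal.size_gb < MIN_JOURNAL_GB:
            problems.append(f"{journal.name} is under {MIN_JOURNAL_GB}GB")
    for vol in [prod, replica, *journals]:
        if vol.block_size != REQUIRED_BLOCK_SIZE:
            problems.append(f"{vol.name} uses {vol.block_size} byte blocks")
    return problems


if __name__ == "__main__":
    # Placeholder names; sizes match the walkthrough (100GB prod, 10GB journals).
    plan = check_plan(
        Volume("prod_lun", 100),
        Volume("prod_lun_replica", 100),
        [Volume("prod_journal", 10), Volume("replica_journal", 10)],
    )
    print(plan or "plan looks OK")
```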

Next I need to map the volume. If you haven’t seen the new volume screen in XtremIO 4.0, it is a little different. Honestly I kind of like the old one which was a bit more visual but I’m sure I’ll come to love this one too. I select all 4 volumes and hit the Create/Modify Mapping button. Side note: notice that even though this is an Eager Zero’d VM, there is only 7.1MB used on the volume highlighted below. How? At first I thought this was the inline deduplication, but XtremIO does a lot of cool things, and one neat thing it does is discard all full-zero block writes coming into the box! So EZTs don’t actually inflate your LUNs.

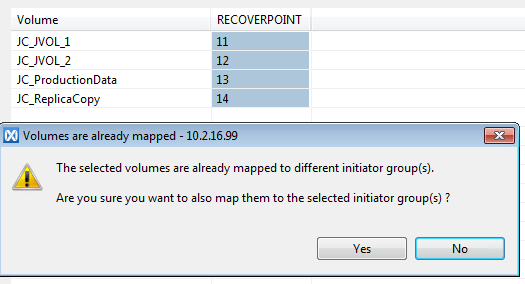
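To picture why an Eager Zero’d disk barely registers as used space, here is a toy model of a volume that throws away all-zero block writes. This is only an illustration of the idea, not how XtremIO is implemented internally; the class, the method names, and the block size are assumptions I picked for the example.

```python
BLOCK_SIZE = 8192  # hypothetical block size for this toy model


class ToyThinVolume:
    """Toy volume that stores nothing for blocks that are entirely zero."""

    def __init__(self) -> None:
        self.blocks = {}  # block index -> bytes actually kept

    def write(self, index: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        if not any(data):
            # Every byte is zero: keep nothing, and drop any older data at this index.
            self.blocks.pop(index, None)
        else:
            self.blocks[index] = data

    def read(self, index: int) -> bytes:
        # Blocks that were never stored read back as zeros.
        return self.blocks.get(index, bytes(BLOCK_SIZE))

    @property
    def used_bytes(self) -> int:
        return len(self.blocks) * BLOCK_SIZE


if __name__ == "__main__":
    vol = ToyThinVolume()
    for i in range(1000):                 # "eager zero" a thousand blocks
        vol.write(i, bytes(BLOCK_SIZE))
    vol.write(7, b"\x01" * BLOCK_SIZE)    # one block of real data
    print(vol.used_bytes)
```

The zeroed blocks still read back as zeros, so the host sees a fully zeroed disk while the array keeps almost nothing.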

Next I choose the Recoverpoint Initiator group (the one that has ALL my RP initiators in it) and map the volume. LUN IDs have never really been that important when dealing with RP, although in remote protection it can be nice to try to keep the local and remote LUN IDs matching up. Trying to make both host LUN IDs and RP LUN IDs match up is a really painful process, especially in larger environments, for (IMO) no real benefit. But if you want to take that up, I won’t stop you, Sisyphus!

Notice I also get a warning because it recognizes that the Production LUN is already mapped to an existing ESX host. That’s OK though, because I know with RP this is just fine.

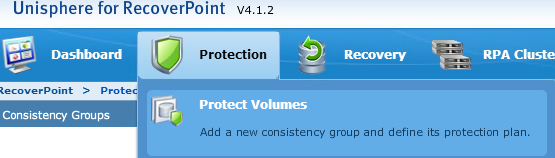

Alright now into Recoverpoint. Just like always I go into Protection and choose Protect Volumes.

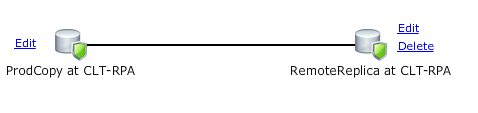

These screens are going to look pretty familiar to you if you’ve used RP before. On this one, for me typically CG Name = LUN name or something like it, Production name is ProdCopy or something similar, and then choose your RPA cluster. Just like always, it is EXTREMELY important to choose the right source and destinations, especially with remote replication. RP will happily replicate a bunch of nothing into your production LUN if you get it backwards! I choose my prod LUN and then I hit modify policies.

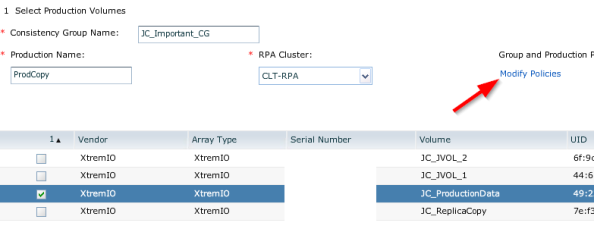

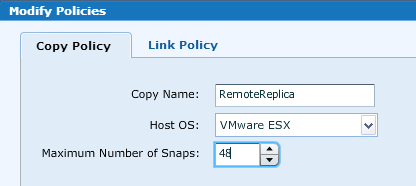

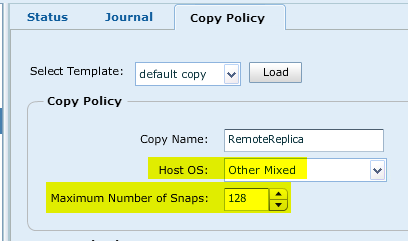

In modify policy, like normal I choose the Host OS (BTW I’ll happily buy a beer for anyone who can really tell me what this setting does…I always set it but have no idea what bearing it really has!) and now I set the maximum number of snaps. This setting controls how many total snapshots the CG will maintain for the given copy. If you haven’t worked with RP before this can be a little confusing because this setting is for the “production copy” and then we’ll set the same setting for the “replica copy.” This allows you to have different settings in a failover situation, but most of the time I keep these identical to avoid confusion. Anywho, we want 48 max snaps so that’s what I enter.

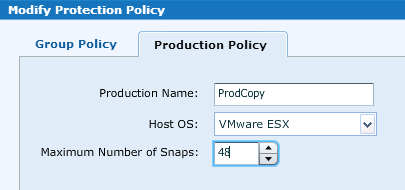

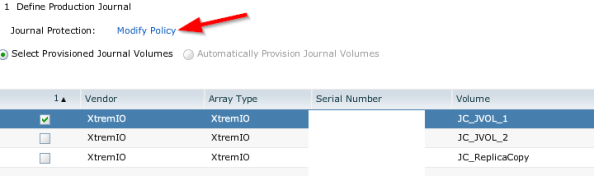

I hit Next and now deal with the production journal. As usual I select that journal I created and then I hit modify policy.

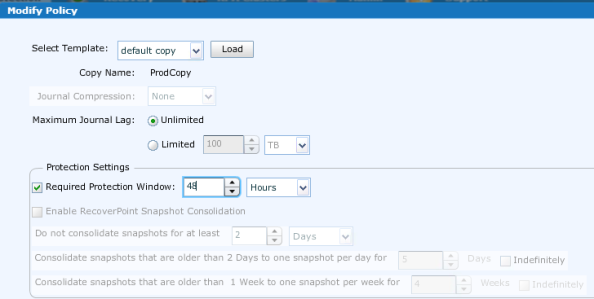

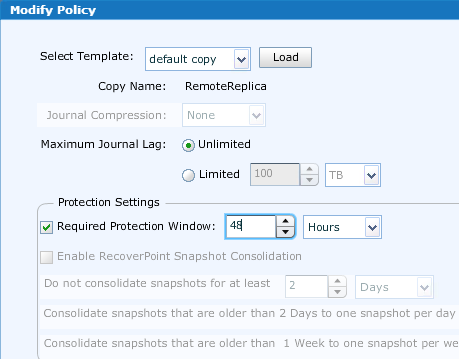

More familiar settings here, and because I want a 48 hour protection window, that’s what I set. Again based on my experience this is an important setting if you only want to protect over a specific period of time…otherwise it will spread your snaps out over 30 days. Notice that snapshot consolidation is greyed out – you can’t even set it anymore. That’s because the new snapshot pruning policy has effectively taken its place!

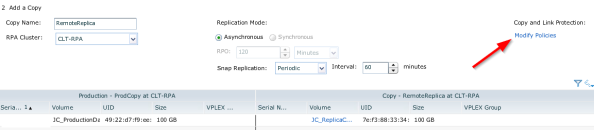

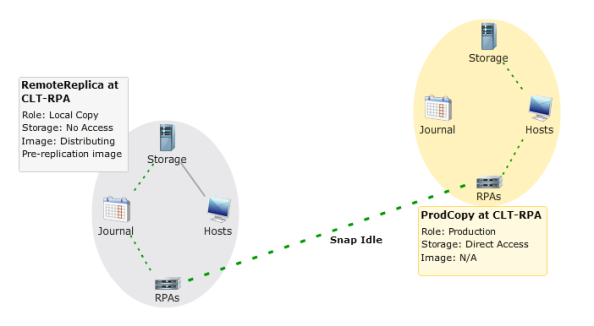
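To see why the protection window matters, compare the average gap between snaps for the same 48 max snaps over 48 hours versus 30 days. This is back-of-the-envelope math only and assumes the snaps are spread evenly, which the actual pruning policy may not do; the function name is made up.

```python
def average_spacing_hours(window_hours: float, max_snaps: int) -> float:
    """Average gap between retained snaps if they were spread evenly (an assumption)."""
    if max_snaps <= 0:
        raise ValueError("max_snaps must be positive")
    return window_hours / max_snaps


if __name__ == "__main__":
    print(average_spacing_hours(48, 48))        # 48 hour window
    print(average_spacing_hours(30 * 24, 48))   # 30 day window
```

With the 48 hour window the average gap is one hour; stretched over 30 days the same 48 snaps average out to one every 15 hours.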

After hitting next, now I choose the replica copy. Pretty standard fare here, but a couple of interesting items in the center – this is where you configure the snap settings. Notice again that there is no synchronous replication; instead you choose periodic or continuous snaps. In our case I choose periodic and a rate of one per 60 minutes. Again I’ll stress, especially in a remote situation it is really important to choose the right RPA cluster! Naming your LUNs with “replica” in the name helps here, since you can see all volume names in Recoverpoint.

In modify policies again we set that host OS and a max snap count of 48 (same thing we set on the production side). Note: don’t skip over the last part of this post where I show you that sometimes this setting doesn’t apply!

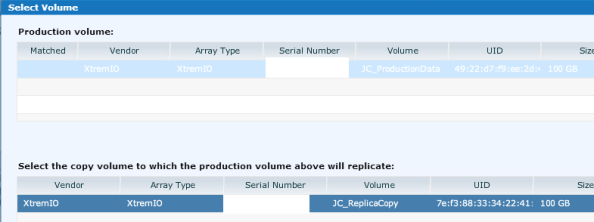

In case you haven’t seen the interface to choose a matching replica, it looks like this. You just choose the partner in the list at the bottom for every production LUN in the top pane. No different from normal RP.

Next, we choose the replica journal and modify policies.

Once again setting the required protection window of 48 hours like we did on the production side.

Next we get a summary screen. Because this is local it is kind of boring, but with remote replication I use this opportunity to again verify that I chose the production site and the remote site correctly.

After we finish up, the CG is displayed like normal, except it goes into “Snap Idle” when it isn’t doing anything active.

One thing I noticed the other day (and why I specifically chose these settings for this example) is that for some reason the replica copy policy settings sometimes aren’t getting set correctly. See here, right after I finished up this example the replica copy policy OS and max snaps aren’t what I specified. The production copy is fine. I’ll assume this is a bug until told otherwise, but just a reminder to go back through and verify these settings when you finish up. If they are wrong you can just fix them and apply.
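If you are setting up a lot of CGs, it can help to write down what you intended and compare it against what the policy screen shows afterward. A minimal sketch, assuming you type both sets of values in by hand; the dictionary keys and the "observed" values are placeholders invented for the example, not values read from RP.

```python
def policy_drift(intended: dict, observed: dict) -> dict:
    """Return {setting: (intended, observed)} for every setting that does not match."""
    return {
        key: (want, observed.get(key))
        for key, want in intended.items()
        if observed.get(key) != want
    }


if __name__ == "__main__":
    # Hypothetical keys and values, entered manually for illustration.
    intended = {"host_os": "ESX", "max_snaps": 48}
    observed = {"host_os": "Other", "max_snaps": 100}
    for setting, (want, got) in policy_drift(intended, observed).items():
        print(f"{setting}: wanted {want!r}, found {got!r}")
```

Anything it prints is a setting to go back and fix on the replica copy.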

Back in XtremIO, notice that the replica is now (more or less) the same size as the production volume as far as used space. Based on my testing this is because the data existed on the prod copy before I configured the CG. If I configure the CG on a blank LUN and then go in and do stuff, nothing happens on the replica LUN by default because it isn’t rolling like it used to. Go snaps!

I’ll let this run for a couple of days and then finish up with a production recovery and a summary.